Part III: Markov Chains & Queueing Systems. 10. Discrete-Time Markov Chains; 11. Stationary Distributions & Limiting Probabilities; 12. State Classification.

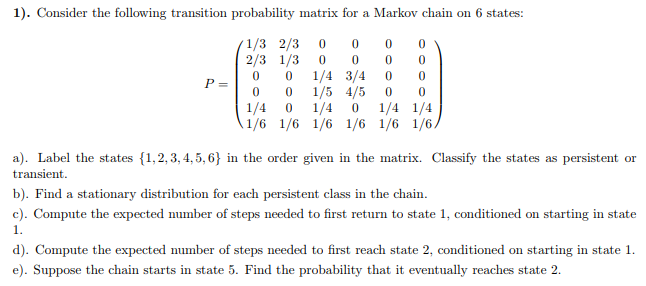

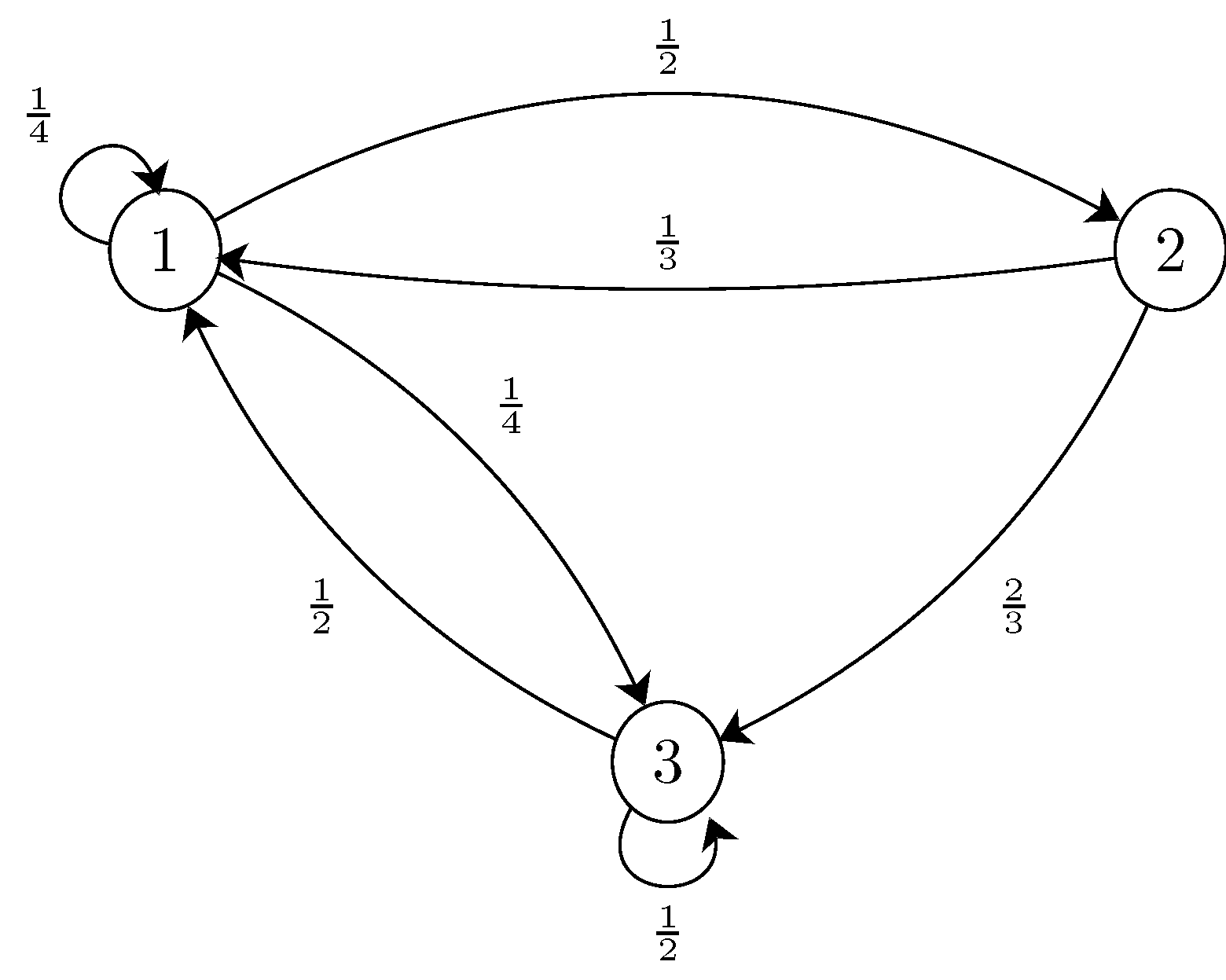

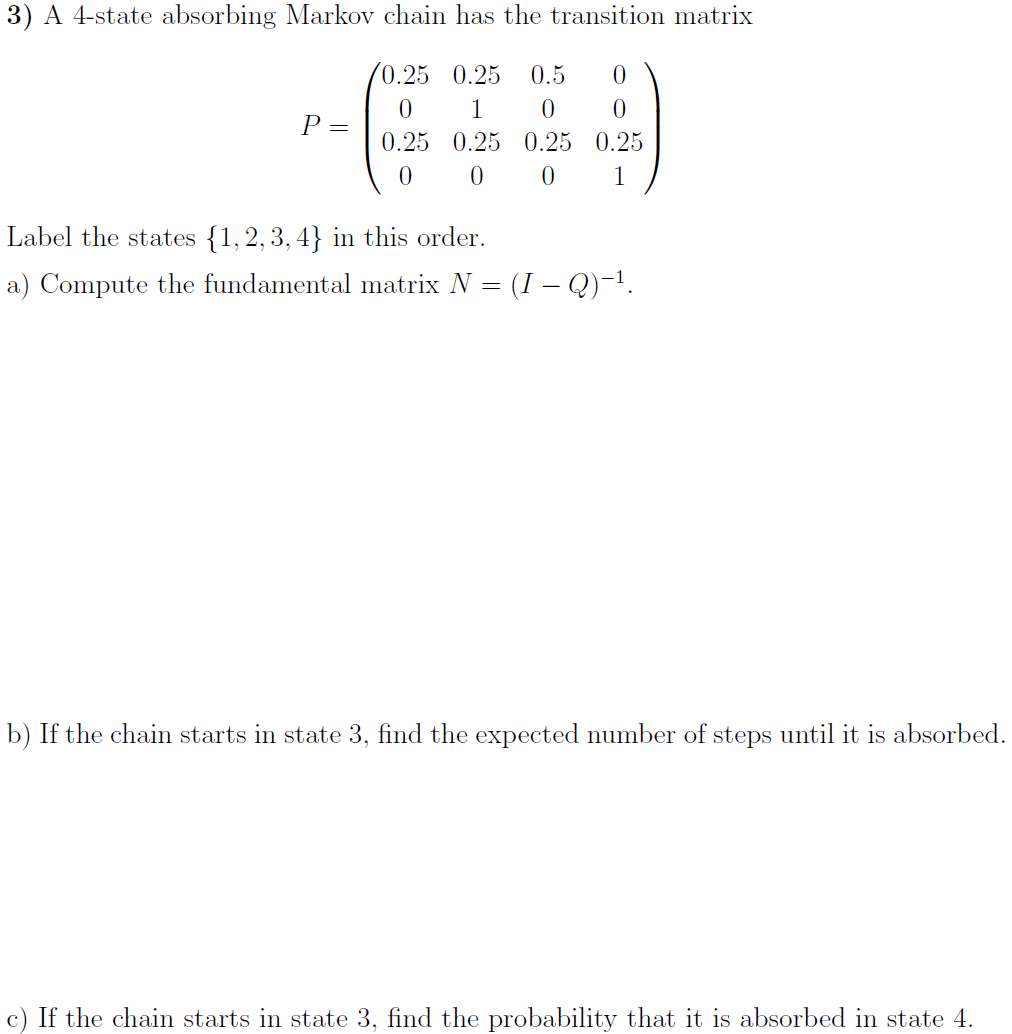

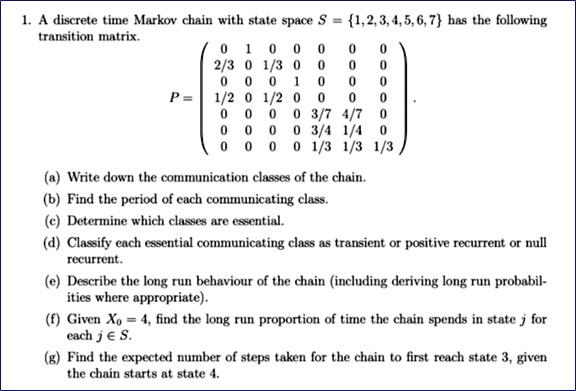

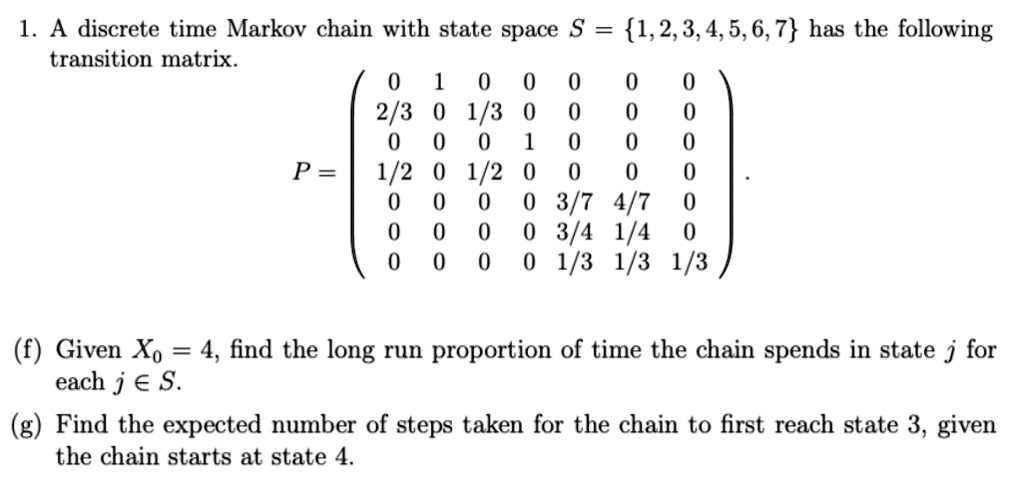

SOLVED: A discrete-time Markov chain with state space {1,2,3,4,5,6,7} has the following transition matrix (only the entries 1/3, 1/3 survive). Write down the communicating classes of the chain. Find the period of each communicating class.

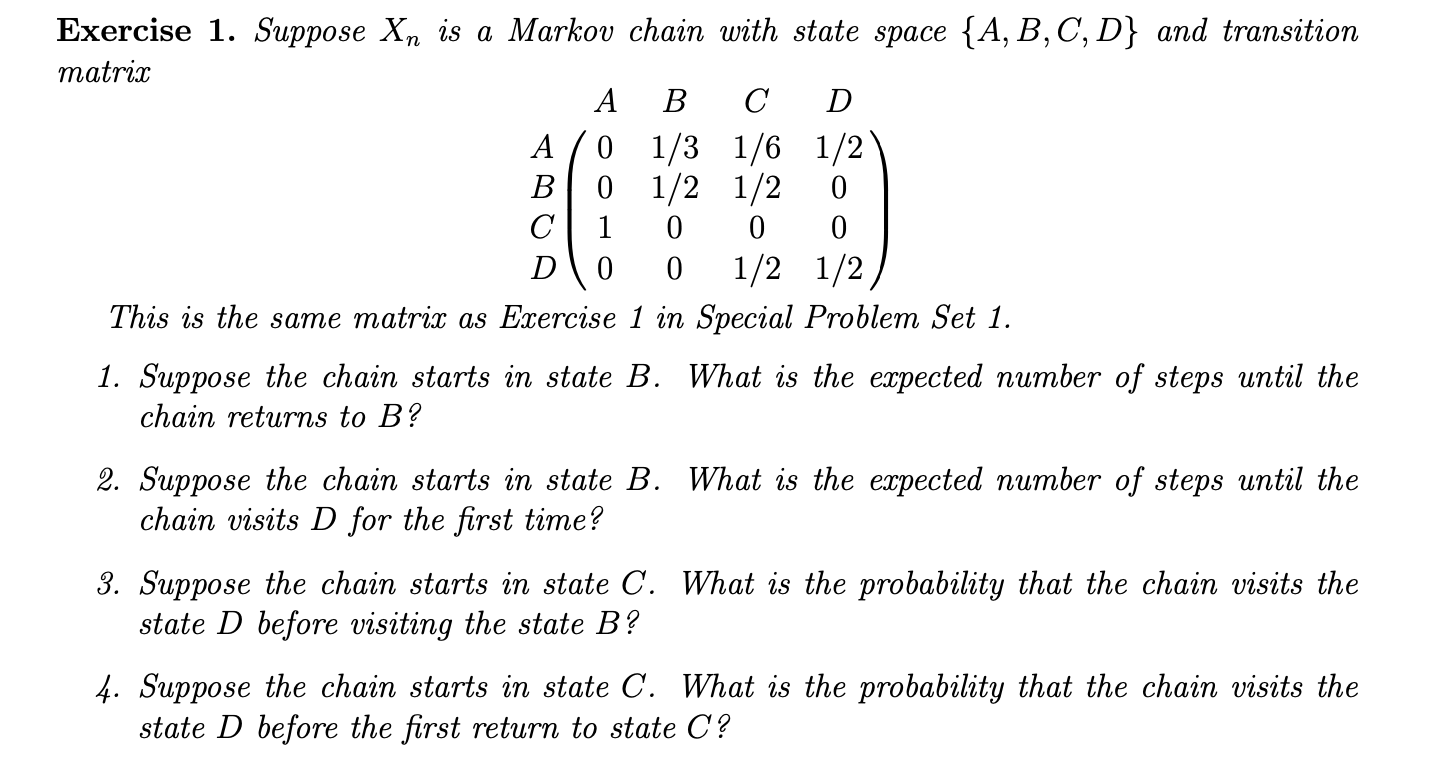

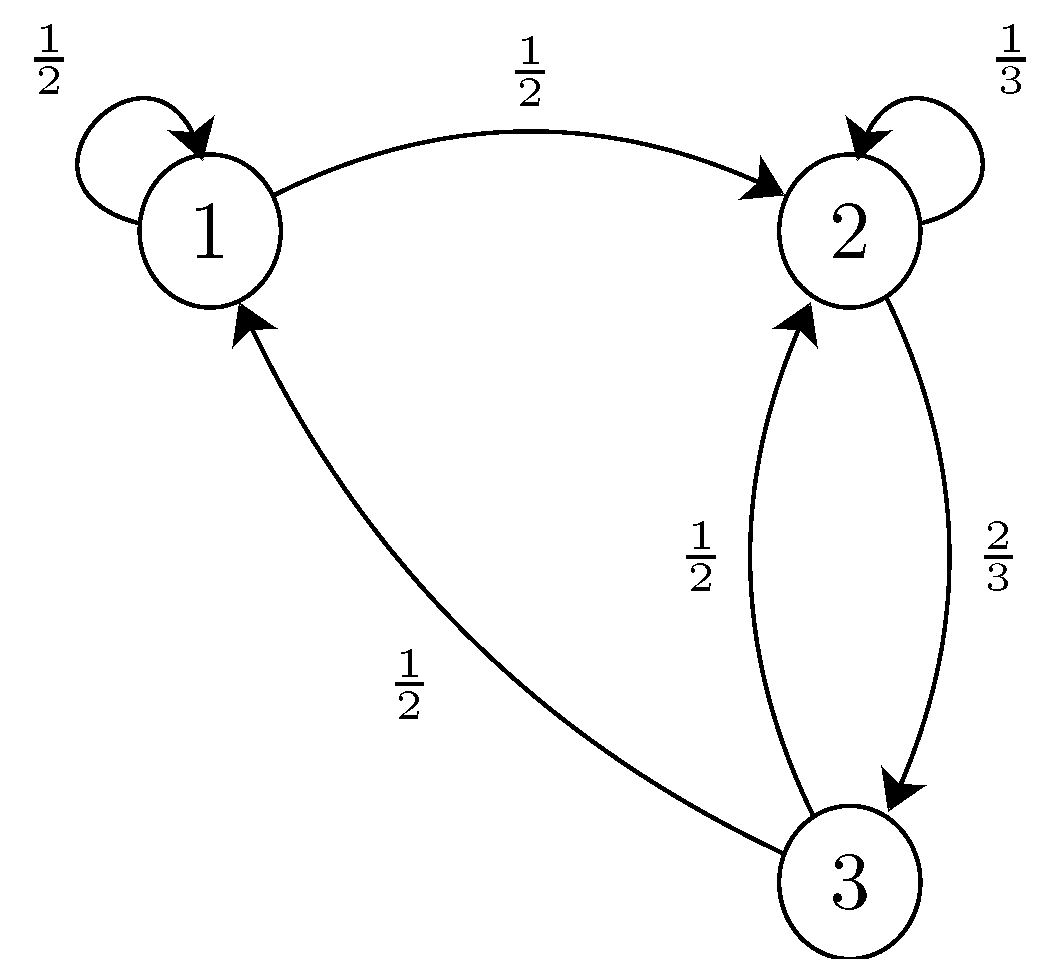

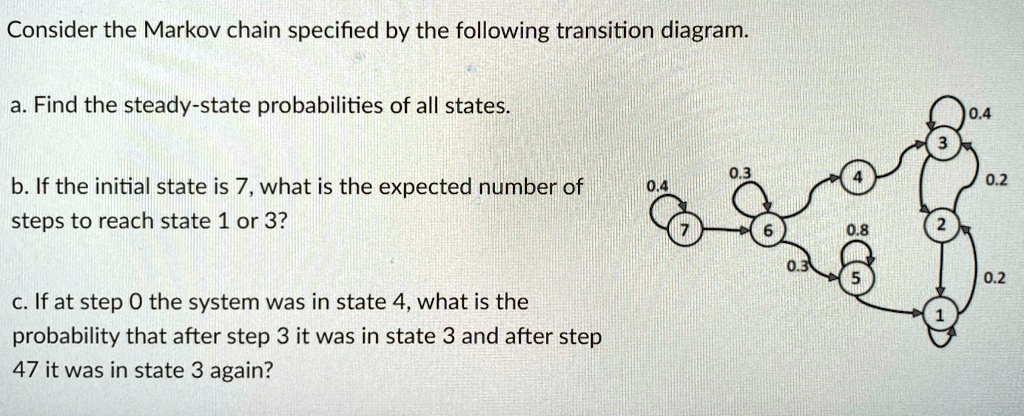

SOLVED: Consider the Markov chain specified by the following transition diagram. a. Find the steady-state probabilities of all states. b. If the initial state is 7, what is the expected number of

SOLVED: 1. A discrete-time Markov chain with state space S = {1,2,3,4,5,6,7} has the following transition matrix: 0 0 0 0 0 2/3 0 0 103 0 0 0 0 1 P =
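The transition matrices in the questions listed here are truncated, so they cannot be worked directly. As a rough illustration of how communicating classes and periods can be computed, here is a minimal sketch on a hypothetical 4-state matrix (states indexed from 0). The matrix `P_example` and the function names `communicating_classes` and `period` are assumptions made for this example and are not taken from any of the problems.

```python
from collections import deque
from math import gcd

def communicating_classes(P):
    """Group states that can reach each other (mutual reachability)."""
    n = len(P)
    # reach[i][j]: j is reachable from i in zero or more steps
    reach = [[i == j or P[i][j] > 0 for j in range(n)] for i in range(n)]
    for k in range(n):  # Warshall-style transitive closure
        for i in range(n):
            if reach[i][k]:
                for j in range(n):
                    if reach[k][j]:
                        reach[i][j] = True
    classes, seen = [], set()
    for i in range(n):
        if i not in seen:
            cls = [j for j in range(n) if reach[i][j] and reach[j][i]]
            seen.update(cls)
            classes.append(cls)
    return classes

def period(P, cls):
    """Period of a communicating class: gcd of (level[u] + 1 - level[v])
    over all edges u -> v inside the class, using BFS levels.
    Returns 0 when the class has no internal transitions (period undefined)."""
    members = sorted(cls)
    start = members[0]
    level = {start: 0}
    queue = deque([start])
    d = 0
    while queue:
        u = queue.popleft()
        for v in members:
            if P[u][v] > 0:
                if v in level:
                    d = gcd(d, level[u] + 1 - level[v])
                else:
                    level[v] = level[u] + 1
                    queue.append(v)
    return d

# Hypothetical example matrix (NOT the matrix from the problems above):
# states 0 and 1 alternate, state 2 is transient, state 3 is absorbing.
P_example = [
    [0,     1, 0,     0],
    [1,     0, 0,     0],
    [1 / 3, 0, 1 / 3, 1 / 3],
    [0,     0, 0,     1],
]

for cls in communicating_classes(P_example):
    print(cls, "period:", period(P_example, cls))
```

For this hypothetical matrix the classes are {0, 1}, {2}, and {3}. The same two functions could be applied to a 7-state matrix once its entries are known in full.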